Magento 2 AWS Autoscaling Cluster with Terraform

This infrastructure is the result of years of experience scaling Magento 1 and 2 in the cloud. It comes with cloud development best practices built in, saving your business time and money.

Migrating Magento to the AWS cloud comes with many advantages. You can operate workloads in new ways: you pay only for what you use, and you can add resources within minutes using auto scaling.

Running Magento in your own AWS account, instead of paying an expensive managed hosting provider (PaaS, SaaS), can substantially reduce your monthly spend.

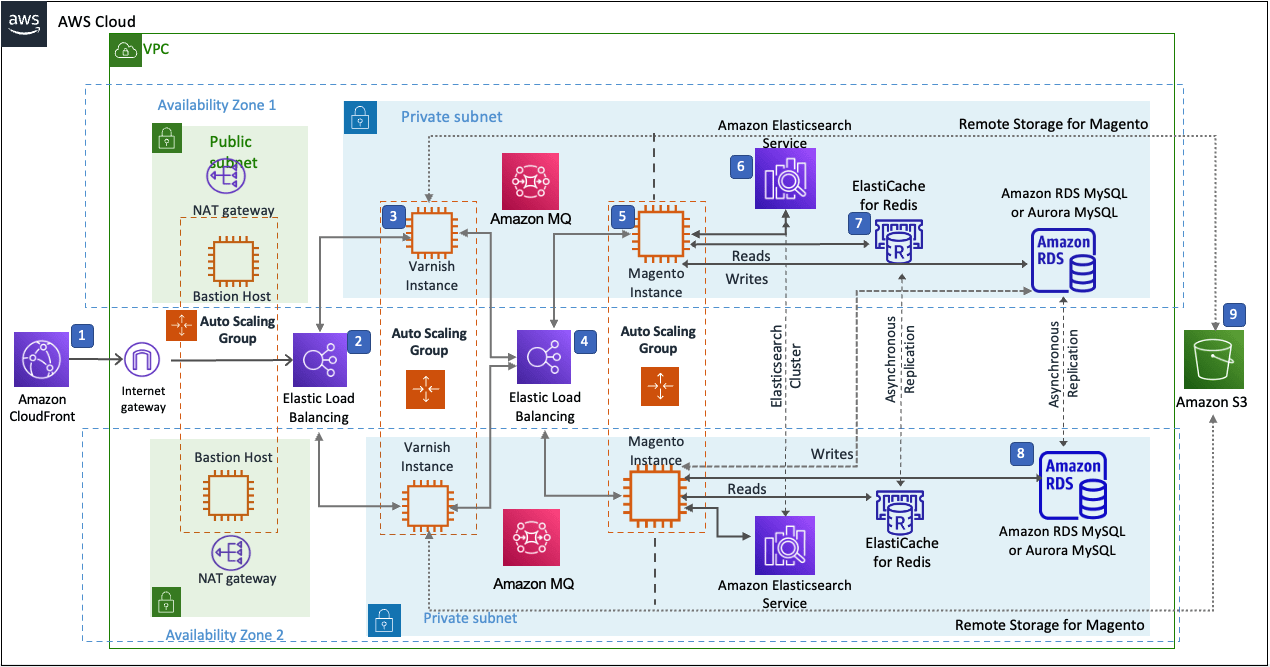

Architecture

- Amazon CloudFront is deployed as a content delivery network (CDN). CloudFront speeds up distribution of static and dynamic web content.

- The first Elastic Load Balancing load balancer (an Application Load Balancer) distributes traffic across Varnish instances in an AWS Auto Scaling group in multiple Availability Zones.

- Varnish Cache is deployed on Amazon EC2. Varnish Cache is a web application accelerator, also known as a caching HTTP reverse proxy.

- The second Elastic Load Balancing load balancer (an Application Load Balancer) distributes traffic from Varnish Cache across the AWS Auto Scaling group of Magento instances in multiple Availability Zones.

- Magento web server on Amazon EC2 instances launched in the private subnets.

- Amazon OpenSearch Service for Magento catalog search.

- An Amazon ElastiCache cluster with the Redis cache engine launched in the private subnets.

- Either an Amazon RDS for MySQL or an Amazon Aurora database engine deployed via Amazon RDS in the first private subnet. If you choose Multi-AZ deployment, a synchronously replicated secondary database is deployed in the second private subnet. This provides high availability and built-in automated failover from the primary database.

- An Amazon S3 bucket created as remote storage where the web server instances store shared media files.

- Amazon MQ (optional) is a message broker that offers a reliable, highly available, scalable, and portable messaging system. Magento's Message Queue Framework (MQF) lets a module publish messages to queues and defines the consumers that receive those messages asynchronously. Bulk operations are actions performed on a large scale, such as importing or exporting items, changing prices in bulk, and assigning products to a warehouse. For each individual task of a bulk operation, the system creates a message that is published to a message queue and processed by a consumer running in the background.

Magento Open Source components

This Quick Start deploys Magento Open Source (2.4.3) with the following prerequisite software:

- Operating system: Amazon Linux x86-64 or Debian

- Web server: NGINX

- Database: Amazon RDS for MySQL 5.6 or Amazon Aurora 5.7

- Programming language: PHP 7.4, including the required extensions

- Message broker: Amazon MQ (RabbitMQ 3.8.11)

- Database Cache: Amazon ElastiCache Redis 6.x

- Page Cache: Varnish 6.5

- Content Catalog Search: Amazon OpenSearch Service 7.10

Deployment Steps

The procedure for deploying a Magento cluster on AWS consists of the following steps. For detailed instructions, follow the links for each step.

Step 1. Prepare an AWS account

If you don’t already have an AWS account, create one at https://aws.amazon.com by following the on-screen instructions. Part of the sign-up process involves receiving a phone call and entering a PIN using the phone keypad.

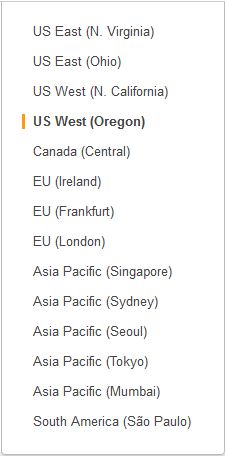

Use the Region selector in the navigation bar to choose the AWS Region where you want to deploy the Magento cluster on AWS. For more information, see Regions and Availability Zones. Regions are dispersed and located in separate geographic areas. Each Region includes at least two Availability Zones that are isolated from one another but connected through low-latency links.

Important

This Quick Start uses Amazon Aurora, which might not be available in all AWS Regions. Before you launch this Quick Start, check the Region table for availability.

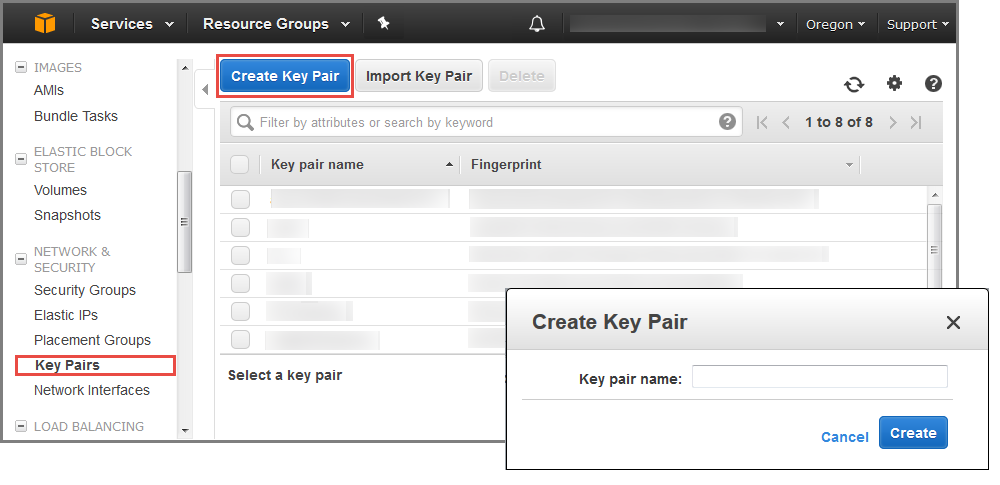

Create a key pair in your preferred Region. In the navigation pane of the Amazon EC2 console, choose Key Pairs, Create Key Pair, type a name, and then choose Create.

Amazon EC2 uses public-key cryptography to encrypt and decrypt login information. To be able to log into your instances, you must create a key pair. On Linux, we use the key pair to authenticate SSH login.
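The key pair can also be created from a terminal instead of the console. The following is a minimal sketch, assuming the AWS CLI is installed and configured with credentials for your account. The function name create_key_pair, the key name, and the output path are hypothetical and not part of this deployment.

```shell
# Sketch: create an EC2 key pair with the AWS CLI and save the private key.
# The function name and its arguments are illustrative assumptions.
create_key_pair() {
  key_name="$1"   # name shown under Key Pairs in the EC2 console
  region="$2"     # must be the Region you plan to deploy into
  out_file="$3"   # where to save the private key (PEM format)

  # The private key is returned only once, in the KeyMaterial field.
  aws ec2 create-key-pair \
    --key-name "$key_name" \
    --region "$region" \
    --query 'KeyMaterial' \
    --output text > "$out_file"

  # SSH rejects private keys that other users can read.
  chmod 400 "$out_file"
}

# Example call (not run here):
# create_key_pair magento-admin us-east-1 ./magento-admin.pem
```

Keep the saved file safe: AWS does not store the private key, so it cannot be downloaded again later.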

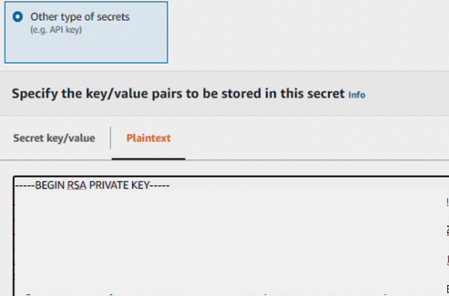

For this deployment, store the private key you created in the previous step as plaintext in AWS Secrets Manager, using the AWS Management Console.

- In the AWS Management Console, navigate to AWS Secrets Manager, choose your AWS Region, and choose Store a new secret.

- Select Other type of secrets and choose Plaintext.

- Clear the {“”:””} JSON format from the Plaintext section.

- Copy and paste your private key.

- Keep the

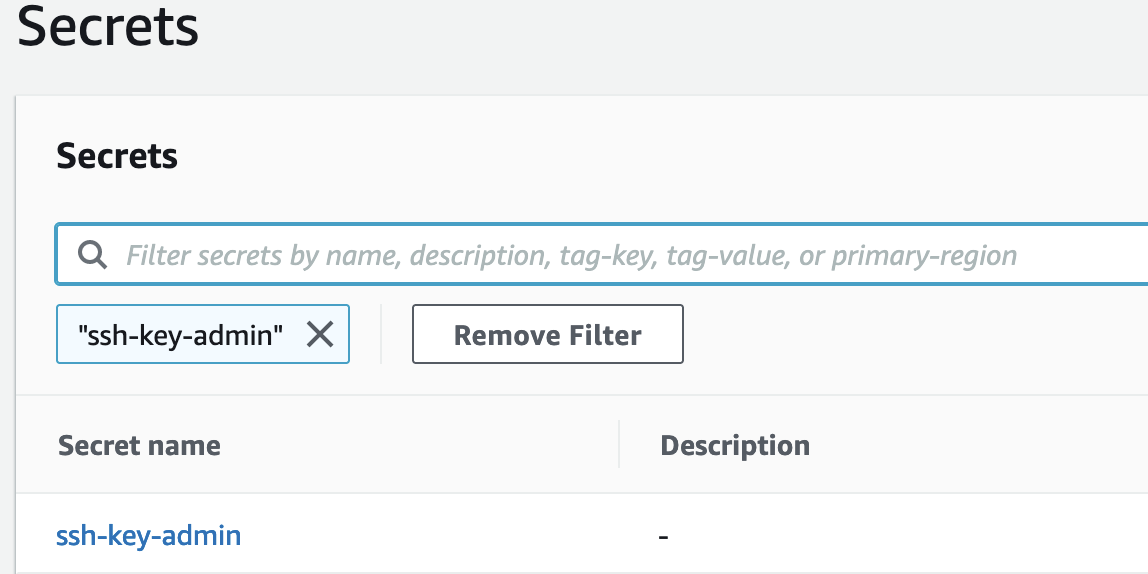

DefaultEncryptionKeyto encrypt your SSH Key secret. Click Next. - Set the secret name as “ssh-key-admin“

- Click Next. Leave the automatic rotation to disabled. Select Next.

- Review and select Store.
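For readers who prefer the command line, the same secret can be stored with the AWS CLI. This is a sketch under the assumption that the AWS CLI is configured and that the private key is saved in a local file. The function name store_ssh_key_secret is hypothetical. The secret name ssh-key-admin comes from the steps above.

```shell
# Sketch: store the SSH private key as a plaintext secret named ssh-key-admin.
# The function name and arguments are illustrative assumptions.
store_ssh_key_secret() {
  key_file="$1"   # path to the private key file created earlier
  region="$2"     # Region where the cluster will be deployed

  # file:// tells the AWS CLI to read the parameter value from a local file.
  # No KMS key is passed, so the account's default Secrets Manager key is used.
  aws secretsmanager create-secret \
    --name ssh-key-admin \
    --description "SSH private key for the Magento cluster" \
    --secret-string "file://$key_file" \
    --region "$region"
}

# Example call (not run here):
# store_ssh_key_secret ./magento-admin.pem us-east-1
```

A secret created this way has no rotation configured, which matches the console steps.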

If necessary, request a service quota increase for the instance types used for the deployment. You might need to request an increase if you need additional Elastic IP addresses or if you already have an existing deployment that uses the same instance types as this architecture. On the Service Quotas console, for each instance type that needs a service quota increase, choose the instance type, choose Request quota increase, and then complete the fields in the quota increase form. It can take a few days for the new service quota to become effective.

Step 2. Create Magento keys for deployment

This deployment uses Magento Composer to manage Magento components and their dependencies. To learn more about Magento Composer, see the Adobe documentation.

- Create a Magento public authentication key for Composer Username.

- Create a Magento private authentication key for Composer Password.

For detailed instructions on creating keys, see the Adobe documentation.

Step 3. Set up Terraform and a Terraform Cloud account

- Install Terraform. For installation steps, see the Terraform documentation.

- Set up a Terraform Cloud account. For setup instructions, see the Terraform Cloud documentation. (A free tier is available.)

- Create a workspace in Terraform Cloud to organize infrastructure. For setup instructions, see the Terraform Cloud workspace documentation.

Step 4. Prepare local environment with Terraform setup

Generate a Terraform Cloud token

terraform login

Export the TERRAFORM_CONFIG variable

export TERRAFORM_CONFIG="$HOME/.terraform.d/credentials.tfrc.json"

Configure the tfvars file

Create terraform.tfvars in the following path

$HOME/.aws/terraform.tfvars

An example of the tfvars file contents

AWS_ACCESS_KEY_ID = "{insert access key ID}"

AWS_SECRET_ACCESS_KEY = "{insert secret access key}"

AWS_SESSION_TOKEN = "{insert session token}"

Note

We recommend using AWS Security Token Service (AWS STS)–based credentials. Before deployment, you must create both an AWS key pair and a Magento deployment key.
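To avoid typing temporary credentials into the file by hand, the tfvars file can be generated from environment variables. The sketch below assumes the three AWS_* variables are already exported in your shell (for example, copied from an AWS STS response). The function name write_tfvars is hypothetical.

```shell
# Sketch: write terraform.tfvars from credentials already in the environment.
# The function name is an illustrative assumption.
write_tfvars() {
  tfvars_path="${1:-$HOME/.aws/terraform.tfvars}"

  # Fail early if any credential is missing from the environment.
  : "${AWS_ACCESS_KEY_ID:?AWS_ACCESS_KEY_ID is not set}"
  : "${AWS_SECRET_ACCESS_KEY:?AWS_SECRET_ACCESS_KEY is not set}"
  : "${AWS_SESSION_TOKEN:?AWS_SESSION_TOKEN is not set}"

  mkdir -p "$(dirname "$tfvars_path")"

  cat > "$tfvars_path" <<EOF
AWS_ACCESS_KEY_ID     = "${AWS_ACCESS_KEY_ID}"
AWS_SECRET_ACCESS_KEY = "${AWS_SECRET_ACCESS_KEY}"
AWS_SESSION_TOKEN     = "${AWS_SESSION_TOKEN}"
EOF

  # The file holds secrets, so restrict it to the current user.
  chmod 600 "$tfvars_path"
}

# Example call (not run here):
# write_tfvars "$HOME/.aws/terraform.tfvars"
```

Because STS session tokens expire, the file would need to be regenerated whenever new temporary credentials are issued.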

Deploy the module (Linux and macOS)

- Clone the repository from GitHub.

- Navigate to the repository’s root directory.

- Navigate to the setup_workspace directory

  cd setup_workspace

- Run the following commands in order

  terraform init

  terraform apply

- Or alternatively, for the previous command, specify the file

  terraform apply -var-file="$HOME/.aws/terraform.tfvars"

- You are asked for the following

  - The AWS Region where you want to deploy this module. This must match the Region where you generated the key pair.

  - The organization under which Terraform Cloud runs. This can be found in the Terraform Cloud console.

  - Setup confirmation.

- Navigate to the deploy directory, and deploy Magento (the previous terraform init command generates backend.hcl)

  cd ../deploy

- Open, edit, and review all of the variables in the variables.tf file.

  - Update the default= value for your deployment.

  - The description= value provides additional context for each variable.

- The following items must be edited before deployment

  - Project-specific: domain_name

  - Magento information: mage_composer_username

  - Magento information: mage_composer_password

  - Magento information: magento_admin_password

  - Magento information: magento_admin_email

  - Database: magento_database_password

  - Variable base_ami_os: Use amazon_linux_2 or Debian_10.

  - Variable use_aurora: If you are using Amazon RDS for MySQL instead of Amazon Aurora, change to false.

- After you review, update, and save the ./deploy/variables.tf file, see the Deployment section.
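If you would rather not write passwords into variables.tf, Terraform also reads input variables from environment variables named TF_VAR_ followed by the variable name when it runs locally. The sketch below is one possible pre-flight check, not part of this module: it verifies that the variables listed above are exported before you deploy. The function name check_required_tf_vars is hypothetical. With Terraform Cloud remote execution, local environment variables are not passed to the run, so workspace variables would be needed instead.

```shell
# Variable names taken from the list of items that must be edited before deployment.
REQUIRED_TF_VARS="domain_name mage_composer_username mage_composer_password magento_admin_password magento_admin_email magento_database_password"

# Sketch: report which required TF_VAR_<name> environment variables are missing.
# The function name is an illustrative assumption.
check_required_tf_vars() {
  missing=""
  for name in $REQUIRED_TF_VARS; do
    # Read TF_VAR_<name> indirectly; unset or empty counts as missing.
    eval "value=\${TF_VAR_${name}:-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done

  if [ -n "$missing" ]; then
    echo "Missing TF_VAR_ values for:$missing" >&2
    return 1
  fi
  echo "All required variables are set."
}

# Example (not run here):
# export TF_VAR_domain_name="shop.example.com"
# check_required_tf_vars
```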

Run the following commands from an IDE or terminal with Terraform installed.

- Initialize the environment

terraform init

- Verify that the architecture to be deployed is correct

terraform plan

- Validate the code

terraform validate

- Deploy the infrastructure by running one of the following commands

terraform apply

Or

terraform apply -var-file="$HOME/.aws/terraform.tfvars"
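The commands above can be chained so that a failure in any step stops the deployment. The following is a minimal sketch, assuming it is run from the deploy directory with Terraform installed. The function name deploy_magento is hypothetical. As a design choice, it runs validate before plan so that syntax errors surface before a plan is computed, and it passes the optional tfvars file to both plan and apply.

```shell
# Sketch: run the Terraform steps in sequence and stop at the first failure.
# The function name is an illustrative assumption.
deploy_magento() {
  var_file="${1:-}"   # optional path to a tfvars file

  terraform init || return 1
  terraform validate || return 1

  if [ -n "$var_file" ]; then
    # Use the same variable file for the plan and the apply.
    terraform plan -var-file="$var_file" || return 1
    terraform apply -var-file="$var_file"
  else
    terraform plan || return 1
    terraform apply
  fi
}

# Example calls (not run here):
# deploy_magento
# deploy_magento "$HOME/.aws/terraform.tfvars"
```

terraform apply still asks for confirmation before it changes anything, so the plan output can be reviewed one more time at that prompt.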

Test the deployment

After Terraform completes, it outputs the frontend and backend URLs. Use the credentials specified in the variables.tf file to log in as an administrator. Run the following command to connect to the web node

ssh -i PATH_TO_GENERATED_KEY -J admin@BASTION_PUBLIC_IP magento@WEB_NODE_PRIVATE_IP

Clean up the infrastructure

If you want to retain the Magento files stored in your Amazon S3 bucket, copy and save the bucket’s objects before completing this step.
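One way to save the bucket's objects is to copy them to a local directory with the AWS CLI. This is a sketch that assumes the AWS CLI is configured. The function name backup_media_bucket, the bucket name, and the destination directory are hypothetical; use the bucket name from your own deployment.

```shell
# Sketch: copy every object from the media bucket to a local directory.
# The function name and arguments are illustrative assumptions.
backup_media_bucket() {
  bucket="$1"   # name of the S3 bucket that holds the Magento media files
  dest="$2"     # local directory that receives the copy

  mkdir -p "$dest"

  # sync copies only objects that are missing or changed at the destination.
  aws s3 sync "s3://$bucket" "$dest"
}

# Example call (not run here):
# backup_media_bucket my-magento-media-bucket ./magento-media-backup
```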

When you no longer need the infrastructure, run one of the following commands to remove it

terraform destroy

terraform destroy -var-file="$HOME/.aws/terraform.tfvars"

After you remove the infrastructure, the database is stored as an artifact.