What you must know about Kubernetes and Docker before deployment

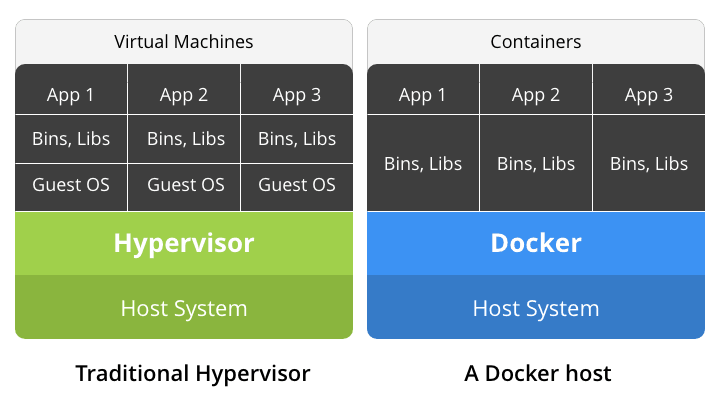

Docker and Kubernetes may appear similar, since both let you run applications in Linux containers. In practice, the two technologies operate at different layers of the stack, and you can use them together. Docker is a containerization platform, while Kubernetes is an orchestrator for containers such as those Docker runs.

Containers help developers keep environments consistent across platforms, from application development through to production. They also give developers more control over the application than conventional virtualization techniques do. This is achieved through isolation at the kernel level, without a guest operating system. The result is better scalability, faster deployment, and consistency across development platforms.

Docker and the rise of containerization

It is hard to talk about Docker without first explaining containers. Writing code can seem simple, but complexity arises when that code is moved to the production environment. A common problem is that code that works well on the developer's machine fails in production, because of differences in libraries, dependencies, or operating systems.

Containers solve these portability issues by separating the code from the underlying environment it runs on. Developers can package the binaries, libraries, and other dependencies together into a container image. That image can then run on any machine in the production environment that has a containerization platform installed.

Overview of Docker Architecture

Docker uses a client-server architecture. Docker Engine and its components let users build, assemble, ship, and run applications. Docker Engine consists of the Docker daemon, a REST API, and a command-line interface (CLI).

The Docker client and the Docker daemon (server) can run on the same system, or the client can connect to a remote daemon. The CLI uses the REST API to tell the daemon which operations to perform.
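To make the client-server split concrete, here is a minimal Python sketch of what a client does under the hood: it writes a plain HTTP request to the daemon's Unix socket. It assumes a Linux host where the daemon listens on the default `/var/run/docker.sock`, and it uses the Engine API's `GET /containers/json` endpoint (the container listing behind `docker ps`). The helper names `build_request` and `call_daemon` are hypothetical, and a real client would also handle chunked responses, API versioning, and errors.

```python
import socket

# Assumption: a Linux host where dockerd listens on its default Unix socket.
DOCKER_SOCKET = "/var/run/docker.sock"


def build_request(method: str, path: str) -> bytes:
    """Build a minimal HTTP/1.1 request for the Docker Engine REST API."""
    return (
        f"{method} {path} HTTP/1.1\r\n"
        "Host: localhost\r\n"    # HTTP/1.1 requires a Host header
        "Connection: close\r\n"  # ask the daemon to close the socket after replying
        "\r\n"                   # blank line ends the headers (no body here)
    ).encode("ascii")


def call_daemon(method: str, path: str, sock_path: str = DOCKER_SOCKET) -> str:
    """Send one request to the daemon and return the raw HTTP response text."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as s:
        s.connect(sock_path)
        s.sendall(build_request(method, path))
        chunks = []
        # Read until the daemon closes the connection.
        while True:
            data = s.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

On a machine where Docker is running (and your user may access the socket), `call_daemon("GET", "/containers/json")` returns the status line, headers, and a JSON list of running containers: roughly the data that `docker ps` formats as a table.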

Docker Daemon: The Docker daemon (dockerd) listens for API requests and manages Docker objects such as containers, images, volumes, and networks. A daemon can also communicate with other daemons to manage Docker services.

The Docker Client: The Docker client (docker) is the primary way users interact with Docker, and a single client can communicate with more than one daemon. When a user enters a command such as ‘docker run’, the client sends it to ‘dockerd’ (the daemon), which carries it out.

Docker Registries: A Docker registry stores Docker images. Docker Hub is the default public registry, but users are free to run their own private registry. When you run ‘docker pull’ or ‘docker run’, Docker retrieves the required images from the registry it is configured to use.
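When you type a short name such as `nginx`, Docker expands it into a full reference that names the registry. The Python sketch below approximates that expansion under simplified rules: default registry `docker.io`, the `library/` namespace for official images, and the default `latest` tag. `normalize_image_ref` is a hypothetical helper; Docker's real reference parser validates much more than this.

```python
def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference to registry/path:tag (simplified sketch)."""
    # A digest ("name@sha256:...") pins an exact image; split it off first.
    name, at, digest = ref.partition("@")

    # A ':' after the last '/' starts a tag; an earlier ':' is a registry port.
    tag = ""
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]

    # The first path component is a registry host only if it looks like one.
    first, slash, rest = name.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name

    # Official Docker Hub images live under the "library/" namespace.
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path

    # Without a tag or digest, assume the "latest" tag.
    if not tag and not at:
        tag = "latest"

    result = f"{registry}/{path}"
    if tag:
        result += ":" + tag
    if at:
        result += "@" + digest
    return result
```

For example, `normalize_image_ref("nginx")` gives `docker.io/library/nginx:latest`, which is why a plain `docker pull nginx` goes to Docker Hub, while `localhost:5000/team/app` stays on the private registry at `localhost:5000`.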

Docker Objects: The elements you create and use with Docker, such as images, containers, networks, volumes, and plugins, are referred to as Docker objects.

Kubernetes and container orchestration

Kubernetes is an open-source orchestration tool for building application services that span multiple containers. It schedules containers across a cluster, scales them, and continuously manages their health.

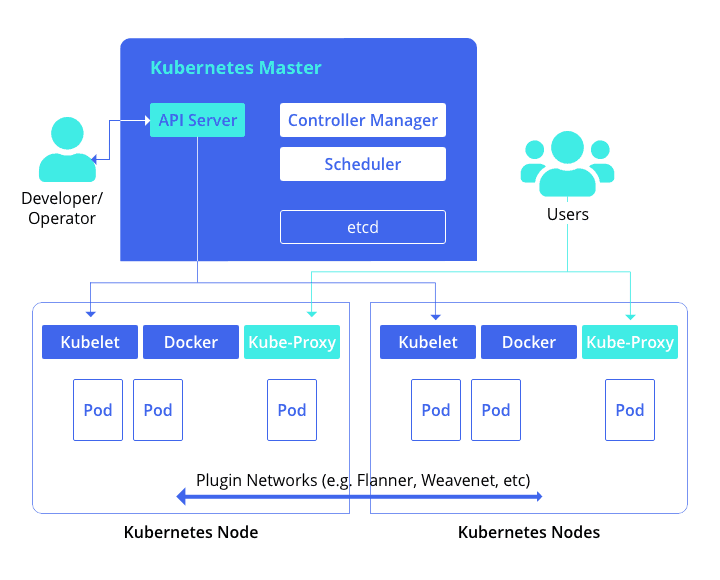

Overview of Kubernetes Architecture

The two fundamental concepts in a Kubernetes cluster are the node and the pod. A node is a bare-metal server or virtual machine that Kubernetes manages. A pod is the unit of deployment: a group of related containers that run together.
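A pod is declared in a manifest. The Python sketch below builds a minimal Pod object with two containers and prints it as JSON. The pod name, container names, and image tags are illustrative assumptions, and `make_pod` is a hypothetical helper. Kubernetes accepts JSON manifests as well as YAML, so the printed text could be saved to a file and applied with `kubectl apply -f`.

```python
import json


def make_pod(name: str, containers: dict) -> dict:
    """Return a minimal Pod manifest; `containers` maps container name to image."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # All containers listed here are placed on the same node and share
            # the pod's network namespace, so they can reach each other via localhost.
            "containers": [
                {"name": cname, "image": image} for cname, image in containers.items()
            ]
        },
    }


pod = make_pod("web-cache", {"web": "nginx:1.25", "cache": "redis:7"})
manifest = json.dumps(pod, indent=2)
print(manifest)
```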

On the Kubernetes master node, we have:

Kube-Controller Manager: It watches the Kube-API Server for the current state of the cluster, compares that state with the desired state, and makes the changes needed to reach the desired state.
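This observe-compare-act cycle is often called a reconciliation loop. The toy Python sketch below shows a single pass for a replica count. `reconcile` and `Action` are hypothetical names; real controllers such as the ReplicaSet controller generate random pod-name suffixes and choose which pods to delete more carefully.

```python
from dataclasses import dataclass


@dataclass
class Action:
    kind: str  # "create" or "delete"
    pod: str   # name of the pod to act on


def reconcile(desired: int, running: list, prefix: str = "web") -> list:
    """One pass of a toy control loop: diff desired vs. observed, return fixes."""
    actions = []
    gap = desired - len(running)
    if gap > 0:
        # Too few pods: create new ones with names not already in use.
        taken = set(running)
        i = 0
        while gap > 0:
            name = f"{prefix}-{i}"
            if name not in taken:
                actions.append(Action("create", name))
                gap -= 1
            i += 1
    elif gap < 0:
        # Too many pods: delete the surplus from the end of the list.
        actions = [Action("delete", name) for name in running[gap:]]
    return actions
```

Running such a pass repeatedly against fresh observations, rather than once, is what lets the cluster recover when a pod disappears between passes.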

Kube-API Server: The API server exposes the levers and gears of Kubernetes. Command-line tools such as kubectl and web UI dashboards talk to kube-apiserver, and human operators use these tools to interact with Kubernetes clusters.

Kube-Scheduler: It decides where workloads run across the cluster. Its decisions depend on available resources and on the guidelines, policies, and permissions set by the operator. Like kube-controller-manager, kube-scheduler watches kube-apiserver to learn the current state of the cluster.
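Scheduling is commonly explained in two phases: filter out nodes that cannot run the pod, then score the remaining ones. The Python sketch below is a deliberately simplified model that considers only CPU and memory requests and uses a "most resources left over" score, similar in spirit to Kubernetes' least-allocated scoring. `Node` and `schedule` are hypothetical names; the real kube-scheduler combines many plugins, such as node affinity and taints.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int   # allocatable CPU in millicores
    cpu_free_m: int       # CPU not yet requested by other pods
    mem_capacity_mi: int  # allocatable memory in MiB
    mem_free_mi: int      # memory not yet requested by other pods


def schedule(cpu_m: int, mem_mi: int, nodes: List[Node]) -> Optional[str]:
    """Return the chosen node name for a pod's requests, or None if nothing fits."""
    # Filter step: drop nodes that cannot satisfy the pod's requests.
    feasible = [n for n in nodes if n.cpu_free_m >= cpu_m and n.mem_free_mi >= mem_mi]
    if not feasible:
        return None  # in Kubernetes, such a pod would stay Pending

    # Score step: favor the node with the largest share of resources left afterwards.
    def score(n: Node) -> float:
        cpu_left = (n.cpu_free_m - cpu_m) / n.cpu_capacity_m
        mem_left = (n.mem_free_mi - mem_mi) / n.mem_capacity_mi
        return (cpu_left + mem_left) / 2

    # Highest score wins; ties go to the alphabetically first node name.
    best = min(feasible, key=lambda n: (-score(n), n.name))
    return best.name
```

Spreading pods toward the emptiest node keeps headroom on every machine; a bin-packing score (preferring the fullest feasible node) would be the opposite trade-off, saving whole nodes at the cost of headroom.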

etcd: etcd is the key-value store behind the master node. Kubernetes uses it to store object definitions, policies, the state of the system, secrets, and so on.
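Because etcd is a key-value store, each cluster object lives under its own key, and listing, say, every pod in a namespace becomes a prefix read. The toy Python model below illustrates that idea. The `/registry/<resource>/<namespace>/<name>` layout is modeled on Kubernetes' default `/registry` key prefix, but `ToyKVStore` and `object_key` are hypothetical, values are simplified JSON strings (real Kubernetes stores serialized, often protobuf-encoded, objects), and real etcd adds Raft replication, watches, and revisions.

```python
class ToyKVStore:
    """In-memory stand-in for etcd's flat key space (no Raft, watches, or leases)."""

    def __init__(self) -> None:
        self._data = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str):
        return self._data.get(key)

    def get_prefix(self, prefix: str) -> list:
        # Return (key, value) pairs in key order, like a range read over a prefix.
        return sorted((k, v) for k, v in self._data.items() if k.startswith(prefix))


def object_key(resource: str, namespace: str, name: str) -> str:
    # Assumed layout, modeled on the default "/registry" prefix Kubernetes uses.
    return f"/registry/{resource}/{namespace}/{name}"


store = ToyKVStore()
store.put(object_key("pods", "default", "web-0"), '{"kind": "Pod"}')
store.put(object_key("pods", "default", "web-1"), '{"kind": "Pod"}')
store.put(object_key("secrets", "default", "db-password"), '{"kind": "Secret"}')
default_pods = store.get_prefix("/registry/pods/default/")
```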

On each Kubernetes worker node, we have:

Kubelet: It carries out instructions from the master node and reports the node's health back to it.
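Part of the health information the kubelet handles comes from probes it runs against containers. The Python sketch below models one common rule: restart a container after a configurable number of consecutive failed liveness checks (Kubernetes' `failureThreshold`, which defaults to 3). `restart_points` is a hypothetical helper, and it ignores real-world details such as probe periods, initial delays, and restart back-off.

```python
def restart_points(probe_results: list, failure_threshold: int = 3) -> list:
    """Return the probe indexes at which a liveness-style rule would restart."""
    restarts = []
    consecutive_failures = 0
    for i, ok in enumerate(probe_results):
        if ok:
            # Any success resets the failure streak.
            consecutive_failures = 0
        else:
            consecutive_failures += 1
            if consecutive_failures >= failure_threshold:
                restarts.append(i)
                # The restarted container starts with a clean slate.
                consecutive_failures = 0
    return restarts
```

For example, the probe sequence pass, fail, fail, pass, fail, fail, fail triggers a single restart at the last probe, because the first two failures were interrupted by a success.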

Kube-proxy: It lets the application's services communicate with each other across the cluster, and it can also expose your application outside the cluster if you configure it to. In short, kube-proxy lets pods exchange messages over the network.
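Conceptually, kube-proxy maps a Service's stable address onto a changing set of pod endpoints and spreads connections across them. The Python sketch below models this with simple round-robin selection, the default scheduler in kube-proxy's IPVS mode (its iptables mode picks backends at random instead). `ToyService` and the endpoint addresses are hypothetical, and real kube-proxy programs kernel rules rather than picking backends in user space.

```python
from itertools import cycle


class ToyService:
    """Hand out backend endpoints for one Service in round-robin order."""

    def __init__(self, endpoints: list) -> None:
        if not endpoints:
            raise ValueError("a Service needs at least one ready endpoint")
        self._endpoints = list(endpoints)  # copy so later caller edits don't leak in
        self._ring = cycle(self._endpoints)

    def pick_backend(self) -> str:
        # Each call returns the next endpoint, wrapping around at the end.
        return next(self._ring)


svc = ToyService(["10.0.0.5:8080", "10.0.0.6:8080"])
picks = [svc.pick_backend() for _ in range(3)]
```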

Docker: Each node runs Docker Engine (or another container runtime) to manage its containers.

Kubernetes vs Docker: Head-To-Head Comparisons

| Feature | Kubernetes | Docker (Swarm mode) |
|---|---|---|
| Installation and cluster configuration | Complex to install, but once set up, the resulting cluster is robust. | Simple to install and set up, but the resulting cluster is comparatively less robust. |
| Scalability | Highly scalable: supports clusters of up to 5,000 nodes and 150,000 pods. | Scalable, though its tested limits are lower: clusters of 1,000 nodes running 30,000 containers. |
| High availability | Highly available, because it performs health checks directly on pods. | Highly available: when a host fails, it restarts the affected containers on other hosts. |
| Load balancing | Traffic between containers in different pods sometimes has to be balanced manually. | Automatically balances traffic between containers in the cluster. |
| Container updates and rollbacks | Rolling updates of workloads, with rollback to a previous revision. | Rolling updates of services, with automatic rollback on failure in Docker 17.04 and later. |
| Data volumes | Volumes let containers in the same pod share data. | Volumes are directories that can be shared by one or more containers. |
| Networking | Uses a network plugin such as flannel to connect containers in a flat virtual network. | Creates a multi-host overlay network so containers on different cluster nodes can communicate consistently. |
| Service discovery | Relies on etcd and explicitly defined Services, which are then easy to discover. | Services are discoverable across the whole cluster network. |

Kubernetes vs Docker: Myth-breaking facts

Kubernetes can run without Docker, and Docker can work without Kubernetes. However, when deployed together, they work efficiently and can form an excellent cloud infrastructure.

Can you use Docker without Kubernetes?

Docker is commonly used without Kubernetes, especially in development environments, where the benefits of a container orchestrator like Kubernetes often do not justify its additional complexity.

Can you use Kubernetes without Docker?

Kubernetes is a container orchestrator, so it needs a container runtime to orchestrate. Kubernetes is often used with Docker, but it can work with other container runtimes, such as containerd or CRI-O, which in turn typically rely on a low-level runtime like runc.

Why should you consider moving to Kubernetes?

As organizations across the globe move to cloud architectures built on containers, they need a trusted and tested platform. Several significant reasons are driving organizations to move to Kubernetes:

- Kubernetes provides many features common to Platform-as-a-Service (PaaS) offerings, such as deployment, scaling, and load balancing, which help businesses move faster.

- It uses resources more efficiently than virtual machines (VMs) and hypervisors, which makes it a cost-efficient solution for organizations.

- It runs on Microsoft Azure, Amazon Web Services, and Google Cloud Platform, as well as on-premises, which makes it a cloud-agnostic solution favored by many businesses today.

On the other hand, Docker has several drawbacks that make it less suitable for large business deployments:

- Limited storage options

- Limited health-check support

- Poor monitoring capabilities

- Mainly a command-line interface


- Complex manual cluster deployment

Final Words

Given their many similarities and differences, it is not easy to compare these two platforms precisely. Docker is simple and easy to work with, whereas Kubernetes brings considerably more complexity.

Docker is an ideal solution for organizations that need fast, effortless deployment, whereas Kubernetes is best suited to production environments running complex applications on large clusters.

Source: techaheadcorp